Adel Bibi

Senior Researcher in Machine Learning and R&D Distinguished Advisor

University of Oxford

Softserve

Biography

Adel Bibi is a senior researcher in machine learning and computer vision at the Department of Engineering Science of the University of Oxford, a Junior Research Fellow (JRF) at Kellogg College, and a member of the ELLIS Society. Bibi is also an R&D Distinguished Advisor with Softserve. Previously, Bibi was a postdoctoral researcher (from October 2020) and then a senior research associate with Philip H.S. Torr. He received his MSc and PhD degrees from King Abdullah University of Science & Technology (KAUST) in 2016 and 2020, respectively, advised by Bernard Ghanem. Bibi was awarded an Amazon Research Award in 2022 in the Machine Learning Algorithms and Theory track, the Google Gemma 2 Academic Award in 2024, the Systemic AI Safety grant by the UK AI Security Institute in 2025, the Toyota Motor Europe Award in 2025, and the Coefficient Giving award in 2025. Bibi received four best paper awards: at a NeurIPS23 workshop, an ICML23 workshop, a CVPR 2022 workshop, and the Optimization and Big Data Conference in 2018. He has published over 50 papers in top machine learning and computer vision conferences. He also received four outstanding reviewer awards (CVPR18, CVPR19, ICCV19, ICLR22) and a Notable Area Chair Award at NeurIPS23.

Currently, Bibi leads a group in Oxford focusing on the intersection of AI safety for large foundation models in both vision and language (covering topics such as robustness, certification, alignment, and adversarial elicitation) and the efficient continual updating of these models.


[Note!] I am always looking for strong, self-motivated PhD students. If you are interested in the safety, trustworthiness, and security of AI models and agentic AI, reach out!

[Consulting Expertise] I have consulted in the past on projects spanning core machine learning and data science, computer vision, certification and AI safety, optimization formulations for matching and resource allocation problems, among other areas.

- Trustworthy AI and Safety

- Robustness and Certification

- Continual Learning

- Optimization

- PhD in Electrical Engineering (4.0/4.0); Machine Learning and Optimization Track, 2020
  King Abdullah University of Science and Technology (KAUST)

- MSc in Electrical Engineering (4.0/4.0); Computer Vision Track, 2016
  King Abdullah University of Science and Technology (KAUST)

- BSc in Electrical Engineering (3.99/4.0), 2014
  Kuwait University

News

- [January 27th, 2026]: Two papers accepted to ICLR 2026.

~~ End of 2025 ~~

- [December 4th, 2025]: Awarded the Coefficient Giving Grant of ~$260,000 to investigate transferable adversarial inputs capable of inducing malicious agent behaviour.

- [December 3rd, 2025]: Our NeurIPS 2023 paper on tokenization’s impact on AI evaluation has been featured in the Hugging Face Evaluation Guidebook.

- [November 4th, 2025]: Our new paper in NeurIPS25 on evaluating progress in AI safety evaluations has been featured by The Guardian and NBC News.

- [November 3rd, 2025]: Featured on Globo TV's "Digital Minds" segment for our work on AI safety (available to watch on Globoplay). Globo is the largest media group in Latin America.

- [September 18th, 2025]: Three papers accepted to NeurIPS25.

- [September 4th, 2025]: Our new work (paper/video) on hijacking agentic systems and demonstrating AI worms has been featured in Scientific American.

- [July 28th, 2025]: My department, Engineering Science at the University of Oxford, has given me the Excellence Award for outstanding performance throughout 2024/2025. This comes with a nice monetary gift.

- [June 10th, 2025]: Our recent work on hijacking AI agents and creating an AI worm was featured on YouTube by Sabine Hossenfelder, whose channel has over 1.7 million subscribers. More importantly, it is one that I watch frequently.

- [May 1st, 2025]: One paper accepted to ICML 2025.

- [April 3rd, 2025]: I was awarded the Systemic AI Safety grant (~$250,000) by the UK AI Security Institute. It was awarded to 20 out of 451 applicants (~4% acceptance rate).

- [March 12th, 2025]: I was interviewed by Al Ekhbariya Channel in a segment broadcast on TV and radio, highlighting my recent state-of-the-art research and my academic journey, which began at KAUST in Saudi Arabia. Watch the interview on X.

- [February 11th, 2025]: Four papers accepted to ICLR 2025, one of them as a spotlight.

- [September 25th, 2024]: Five papers accepted to NeurIPS 2024.

- [September 20th, 2024]: I received the Google Gemma 2 Academic Program GCP Credit Award ($10,000).

- [August 18th, 2024]: I joined Softserve as an R&D Distinguished Advisor.

- [July 8th, 2024]: One paper accepted to MICCAI 2024.

- [June 3rd, 2024]: One paper accepted to ECCV 2024.

- [June 3rd, 2024]: Four papers accepted to ICML 2024, one of them as an oral. Special congratulations to all the student lead authors.

- [May 28th, 2024]: Kumail Alhamoud is visiting me and Phil for 3 months this summer. Welcome, Kumail!

- [May 23rd, 2024]: I have been selected to serve as a Senior Area Chair for NeurIPS 2024.

- [May 14th, 2024]: I was invited by the Rt Hon Deputy Prime Minister Oliver Dowden to be part of the official British Delegation (GREAT FUTURES) to Saudi Arabia for a trade expo aimed at strengthening collaboration between the two nations on all fronts.

- [May 11th, 2024]: Media Coverage: Our recent paper No "Zero-Shot" Without Exponential Data has been featured by Computerphile (~2.5 million subscribers) on YouTube; see the video here. Moreover, Sam Altman of OpenAI commented on our paper on Reddit, saying that OpenAI is exploring similar directions. See details here.

- [April 19th, 2024]: I was invited to give a number of talks at MBZUAI and the Oxford Robotics Institute.

- [February 15th, 2024]: I gave a talk to TAHAKOM on AI Safety Research.

- [February 13th, 2024]: I gave a talk on our work on AI safety at the AI Safety meeting at the Saïd Business School in Oxford.

- [February 7th, 2024]: Our paper SynthCLIP: Are We Ready for a Fully Synthetic CLIP Training?, on training CLIP with only synthetic data, was featured in TLDR News, which has over 1M subscribers.

- [January 21st, 2024]: Three papers accepted to ICLR24 (one as a spotlight).

~~ End of 2023 ~~

- [December 16th, 2023]: Our paper When Do Prompting and Prefix-Tuning Work? A Theory of Capabilities and Limitations received the Entropic Paper Award at the NeurIPS 2023 Workshop: I Can’t Believe It’s Not Better (ICBINB): Failure Modes in the Age of Foundation Models.

- [December 12th, 2023]: Received a Notable Area Chair Award at NeurIPS23 (awarded to 8.1% of 1,223 area chairs).

- [December 9th, 2023]: One paper (SimCS: Simulation for Domain Incremental Online Continual Segmentation) accepted to AAAI24.

- [November 2nd, 2023]: I gave two talks on trustworthy AI, at Kuwait University and at Intematix, a startup based in Riyadh, Saudi Arabia.

- [September 21st, 2023]: Our paper Language Model Tokenizers Introduce Unfairness Between Languages has been accepted to NeurIPS23.

- [September 5th, 2023]: Invited by Dima Damen to give a talk at the University of Bristol.

- [July 18th, 2023]: One robustness paper is accepted to TMLR.

- [July 14th, 2023]: One paper on online continual learning accepted to ICCV 2023.

- [July 11th, 2023]: Our paper Provably Correct Physics-Informed Neural Networks received an outstanding paper award at the Formal Verification Workshop (WFVML) at ICML23.

- [June 23rd, 2023]: One paper accepted to DeployableGenerativeAI (ICML23 Workshop).

- [June 20th, 2023]: Three papers accepted to AdvML-Frontiers (ICML23 Workshop).

- [June 18th, 2023]: Invited to give a talk at the CLVision workshop at CVPR23. I also took part in the panel discussion.

- [April 24th, 2023]: One paper accepted to ICML 2023.

- [March 29th, 2023]: Four papers accepted to CLVision (CVPR23 Workshop).

- [March 9th, 2023]: Media coverage by DOU, a Ukrainian development community focused on technologies, frameworks, and code, for my work with Taras Rumezhak.

- [March 1st, 2023]: I was promoted to Senior Researcher (G9) in Machine Learning and Computer Vision at Oxford.

- [February 28th, 2023]: I will serve as an Area Chair for NeurIPS 2023.

- [February 27th, 2023]: Two papers accepted to CVPR 2023. One of the two papers is accepted as a highlight (2.5% of ~9K submissions).

- [January 23rd, 2023]: I will serve as an Area Chair for the ICLR 2023 Workshop on Trustworthy ML.

~~ End of 2022 ~~

- [December 9th, 2022]: I will serve on the Senior Program Committee (Area Chair/Meta-Reviewer) for IJCAI 2023.

- [November 3rd, 2022]: Joined the ELLIS Society as a member.

- [October 11th, 2022]: One paper accepted to WACV23.

- [October 10th, 2022]: Received an Amazon Research Award.

- [September 14th, 2022]: Our paper N-FGSM accepted to NeurIPS22.

- [August 28th, 2022]: Our paper ANCER was accepted in Transactions on Machine Learning Research (TMLR).

- [August 15th, 2022]: Our paper on Tropical Geometry was accepted to appear in the IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI).

- [July 11th, 2022]: I will serve on the Senior Program Committee (Area Chair/Meta-Reviewer) for AAAI23.

- [May 26th, 2022]: Three papers accepted to the Adversarial Machine Learning Frontiers ICML2022 workshop! The papers will be on arXiv soon.

- [May 16th, 2022]: Our paper titled Data Dependent Randomized Smoothing was accepted to UAI22.

- [April 22nd, 2022]: I was selected as a Highlighted Reviewer of ICLR 2022 and received a free conference registration.

~~ End of 2021 ~~

- [Dec 1st, 2021]: I was promoted to Senior Research Associate (G8) in machine learning in the Torr Vision Group (TVG) at the University of Oxford.

- [Dec 1st, 2021]: Two papers, Combating Adversaries with Anti-Adversaries and DeformRS: Certifying Input Deformations with Randomized Smoothing, were accepted to AAAI22.

- [Nov 18th, 2021]: We were awarded KAUST's Competitive Research Grant, totaling over $1.05M. This is a three-year collaboration between KAUST and Oxford.

- [Oct 15th, 2021]: Rethinking Clustering for Robustness was accepted to BMVC21.

- [June 22nd, 2021]: Our anti-adversary paper was accepted to the Adversarial Machine Learning Workshop @ICML21.

- [June 13th, 2021]: I have been elected as a Junior Research Fellow of Kellogg College, University of Oxford. Appointment starts in October 2021.

- [March 28th, 2021]: Our ETB robustness paper was accepted to the RobustML Workshop @ICLR21.

~~ End of 2020 ~~

- [November 8th, 2020]: New paper on robustness is on arXiv.

- [November 8th, 2020]: New paper on randomized smoothing is on arXiv.

- [October 15th, 2020]: I joined the Torr Vision Group working with Philip Torr at the University of Oxford.

- [July 2nd, 2020]: Our paper showing that Gabor layers enhance robustness was accepted to ECCV20 (arXiv).

- [June 30th, 2020]: New paper out on new expressions for the output moments of ReLU-based networks, with various new applications (arXiv).

- [June 24th, 2020]: New paper with SOTA results, backed by theory, on training robust models through feature clustering (arXiv).

- [March 31st, 2020]: I have successfully defended my PhD thesis.

~~ End of 2019 ~~

- [Dec 20th, 2019]: One paper accepted to ICLR20.

- [Nov 11th, 2019]: One spotlight paper accepted to AAAI20.

- [Sept 25th, 2019]: Recognized as an outstanding reviewer for ICCV19. Link.

- [August 5th, 2019]: I was invited to give a talk about the most recent research in computer vision and machine learning from the IVUL group at PRIS19, Dead Sea, Jordan. I also gave a one-hour workshop on deep learning and PyTorch. Slides1/Slides2/Material.

- [July 6th, 2019]: I was invited to give a talk at the Eastern European Conference on Computer Vision, Odessa, Ukraine. Slides.

- [June 28th, 2019]: I gave a talk at the Biomedical Computer Vision Group directed by Prof. Pablo Arbeláez, Bogotá, Colombia. Slides.

- [June 15th, 2019]: Attended CVPR19.

- [June 9th, 2019]: Recognized as an outstanding reviewer for CVPR19. This is the second time in a row for CVPR. Check it out. :)

- [May 26th, 2019]: A new paper is out on derivative-free optimization with momentum, with new rates and results on continuous control tasks. arXiv.

- [May 25th, 2019]: New paper! New provably tight interval bounds are derived for DNNs. This allows for very simple robust training of large DNNs. arXiv.

- [May 11th, 2019]: How can we train robust networks that outperform 2-21x data augmentation? New paper out on arXiv.

- [May 6th, 2019]: Attended ICLR19 in New Orleans.

- [Feb 4th, 2019]: New paper on derivative-free optimization with importance sampling is out! Paper is on arXiv.

~~ End of 2018 ~~

- [Dec 22nd, 2018]: One paper accepted to ICLR19, Louisiana, USA.

- [Nov 6th, 2018]: One paper accepted to WACV19, Hawaii, USA.

- [July 3rd, 2018]: One paper accepted to ECCV18, Munich, Germany.

- [June 19th, 2018]: Attended CVPR18 and gave an oral talk on our most recent work on analyzing piecewise linear deep networks using Gaussian network moments. TensorFlow, PyTorch, and MATLAB code has been released.

- [June 17th, 2018]: Received a fully funded scholarship to attend the AI-DLDA 18 summer school in Udine, Italy. Unfortunately, I won't be able to attend due to time constraints. Link

- [June 15th, 2018]: New paper out! “Improving SAGA via a Probabilistic Interpolation with Gradient Descent”.

- [April 30th, 2018]: I'm interning for 6 months at Intel Labs in Munich this summer with Vladlen Koltun.

- [April 22nd, 2018]: Recognized as an outstanding reviewer for CVPR18. I’m also on the list of emergency reviewers. Check it out. :)

- [March 6th, 2018]: One paper accepted as an oral to CVPR 2018.

- [Feb 5, 2018]: Awarded the best KAUST poster prize in the Optimization and Big Data Conference.

~~ End of 2017 ~~

- [December 11, 2017]: TCSC code is on GitHub.

- [October 22, 2017]: Attended ICCV17, Venice, Italy.

- [July 22, 2017]: Attended CVPR17 in Hawaii and gave an oral presentation on our work on solving the LASSO with FFTs.

- [July 16, 2017]: FFTLasso’s code is available online.

- [July 9, 2017]: Attended the ICVSS17, Sicily, Italy.

- [June 15, 2017]: Selected to attend the International Computer Vision Summer School (ICVSS17), Sicily, Italy.

- [March 17, 2017]: 1 paper accepted to ICCV17.

- [March 14, 2017]: Received my NanoDegree on Deep Learning from Udacity.

- [March 3, 2017]: 1 oral paper accepted to CVPR17, Hawaii, USA.

~~ End of 2016 ~~

- [October 19, 2016]: ECCV16’s code has been released on github.

- [October 8, 2016]: Attended ECCV16, Amsterdam, Netherlands.

- [July 11, 2016]: 1 spotlight paper accepted to ECCV16, Amsterdam, Netherlands.

- [June 26, 2016]: Attended CVPR16, Las Vegas, USA. Two papers presented.

- [May 13, 2016]: ICCVW15 code is now available online.

- [April 11, 2016]: Successfully defended my Master’s Thesis.

- [March 2, 2016]: 2 papers (1 spotlight) accepted to CVPR16, Las Vegas, USA.

~~ End of 2015 ~~

Awards and Recognition

Featured Publications

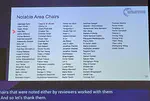

Recent advances in operating system (OS) agents have enabled vision-language models (VLMs) to directly control a user’s computer. Unlike conventional VLMs that passively output text, OS agents autonomously perform computer-based tasks in response to a single user prompt. OS agents do so by capturing, parsing, and analysing screenshots and executing low-level actions via application programming interfaces (APIs), such as mouse clicks and keyboard inputs. This direct interaction with the OS significantly raises the stakes, as failures or manipulations can have immediate and tangible consequences. In this work, we uncover a novel attack vector against these OS agents: Malicious Image Patches (MIPs), adversarially perturbed screen regions that, when captured by an OS agent, induce it to perform harmful actions by exploiting specific APIs. For instance, a MIP can be embedded in a desktop wallpaper or shared on social media to cause an OS agent to exfiltrate sensitive user data. We show that MIPs generalise across user prompts and screen configurations, and that they can hijack multiple OS agents even during the execution of benign instructions. These findings expose critical security vulnerabilities in OS agents that have to be carefully addressed before their widespread deployment.

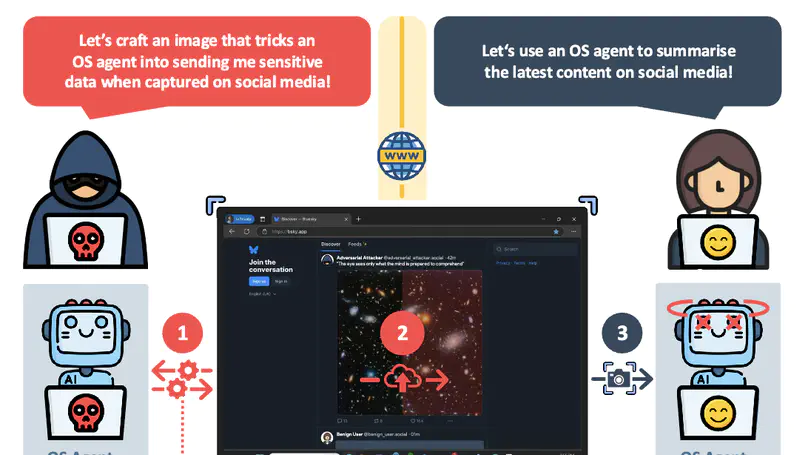

Evaluating large language models (LLMs) is crucial for both assessing their capabilities and identifying safety or robustness issues prior to deployment. Reliably measuring abstract and complex phenomena such as ‘safety’ and ‘robustness’ requires strong construct validity, that is, having measures that represent what matters to the phenomenon. With a team of 29 expert reviewers, we conduct a systematic review of 445 LLM benchmarks from leading conferences in natural language processing and machine learning. Across the reviewed articles, we find patterns related to the measured phenomena, tasks, and scoring metrics which undermine the validity of the resulting claims. To address these shortcomings, we provide eight key recommendations and detailed actionable guidance to researchers and practitioners in developing LLM benchmarks.

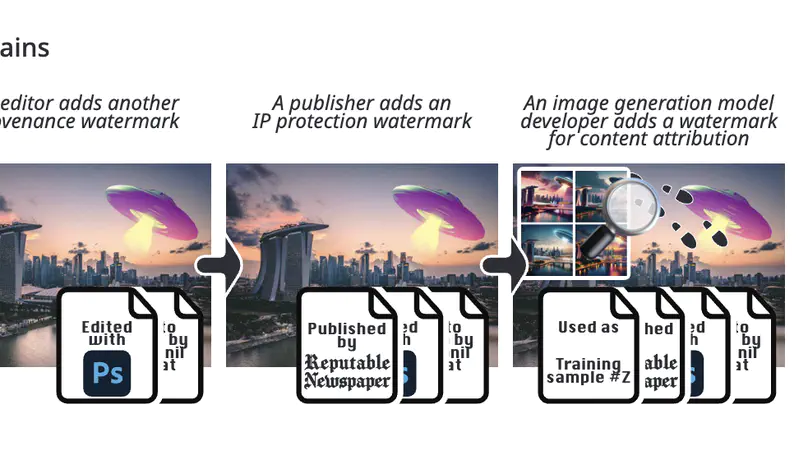

Watermarking, the practice of embedding imperceptible information into media such as images, videos, audio, and text, is essential for intellectual property protection, content provenance and attribution. The growing complexity of digital ecosystems necessitates watermarks for different uses to be embedded in the same media. However, to detect and decode all watermarks, they need to coexist well with one another. We perform the first study of coexistence of deep image watermarking methods and, contrary to intuition, we find that various open-source watermarks can coexist with only minor impacts on image quality and decoding robustness. The coexistence of watermarks also opens the avenue for ensembling watermarking methods. We show how ensembling can increase the overall message capacity and enable new trade-offs between capacity, accuracy, robustness and image quality, without needing to retrain the base models.

Recent & Upcoming Talks

Contact

- adel.bibi@eng.ox.ac.uk

- 20.16, Department of Engineering Science, University of Oxford, Parks Road, Oxford, OX1 3PJ